AI Part II: AI security != classical security

It should be obvious

In part I of this series, I left a couple of threads of discussion dangling: how best to secure AI installations, and how to protect ourselves from AI-enhanced attacks. I will pick those up here. However, I was recently asked to speak on a panel on AI security, framed by the question, “Is AI security any different from classical security?” My instinctive response is, “Of course it is, you dolt,” but that is too harsh. It’s an interesting question, it goes to the first dangling thread, and it is where I’d like to begin.

I’d like to draw an analogy between reality (‘real life’) and AI. Something I’ve always found fascinating about the sciences is the hierarchy of rules each domain of science creates. At the most foundational level, our understanding of ‘real life’ rests on physics1. Physics produces any number of theories and laws that, taken together, form a shockingly accurate model of how things work. (I’m ignoring that even the best models, relativity and quantum mechanics, are incomplete and may be somewhat incompatible.) I love the fact that every few years someone devises some exquisitely subtle and original test of relativity, only to have its predictions validated to yet more decimal places.

If we move to the next layer of organization, chemistry, we find yet another body of rules and laws that, largely without reference to physics, allow us to understand, explore, and predict chemical reactions with, again, astonishing accuracy. Consider Avogadro’s law which, dating from 1811, clearly doesn’t rely on modern physics2.

Chemistry naturally gives rise to biological organization. Once again we find an emergent set of new rules that govern biological processes, the most recognizable of which is perhaps Darwinian evolution. Essentially, as the ‘hard’ ontology of real life coalesces at each level, we identify rules that allow us to make predictions about, and analyze the activity of, that level with little or no reference to the level below.

At the next level, we can look to psychology and sociology (as well as every human activity from architecture to finance) and their proliferation of laws, theories, and rules we use to manage human society. While there is a cottage industry of intellectual grifters trying to tie this fourth domain to the third, biology, we, as rational beings, gentle readers, can ignore them. Though, to be fair, it’s hard to explain our modern political dilemmas without reference to the vestigial lizard brain of our brainstem.

The analogy I would like to make is this: just as each new domain of science produces its own set of laws and rules, AI models have emergent features that require a new set of cybersecurity controls and analyses. AI models run on systems and operate over networks, both of which are amenable to classical information security. Yet AI models are well known for having additional emergent properties as a consequence of their complexity and, perhaps, of the internal hierarchy of systems within them (e.g., token embeddings, attention heads, or agentic behaviors)3.

This should be no surprise to the cybersecurity professional. Network-based intrusion detection and monitoring are necessary in any environment. Once application security is considered, however, we find that an entirely new set of security controls and analyses becomes necessary. The situation is similar for AI, except that, unlike most applications, it is nearly impossible to predict what an AI will do, or attempt to do, in response to a prompt. Indeed, the space of possible LLM prompts is essentially unlimited, so the classical concept of input validation breaks down almost completely.
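The contrast can be sketched in a few lines of Python. The ZIP-code field and the phrase denylist are hypothetical examples, not recommended controls. A structured field can be validated against an allowlist that describes every legal value. A prompt filter can only enumerate things already known to be bad, so a simple paraphrase gets past it.

```python
import re

# Classical input validation: a hypothetical US ZIP code field has a small,
# well-defined grammar, so an allowlist pattern can accept exactly the legal
# values and reject everything else.
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")

def is_valid_zip(value: str) -> bool:
    """Accept only strings that match the ZIP grammar in full."""
    return ZIP_PATTERN.fullmatch(value) is not None

# A free-text prompt has no comparable grammar. A simple filter can only keep
# a denylist of known-bad phrases (hypothetical list), which enumerates past
# attacks rather than describing what valid input looks like.
BLOCKED_PHRASES = ["ignore previous instructions", "reveal the system prompt"]

def naive_prompt_filter(prompt: str) -> bool:
    """Return True if the prompt is allowed through the denylist."""
    lowered = prompt.lower()
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)

print(is_valid_zip("02139"))              # True
print(is_valid_zip("02139; DROP TABLE"))  # False
# The literal attack phrase is caught...
print(naive_prompt_filter("Please ignore previous instructions."))  # False
# ...but a paraphrase with the same intent passes.
print(naive_prompt_filter("Disregard everything you were told earlier."))  # True
```

The point is not that prompt filtering is useless. Rather, it inverts classical validation: instead of describing the finite set of good inputs, it chases an unbounded set of bad ones.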

I suspect this analogy, though helpful, is not perfect, and I use it here for illustration. In formal mathematics, difficult problems are often solved by showing they’re equivalent to more tractable problems in a different mathematical domain. I don’t see the analogy proposed here as that kind of problem-solving tool. Rather, I hope it is a question-generating engine4.

What are some of the emergent properties of AI? We could start with the well known. There is in-context learning, where LLMs learn and perform entirely new tasks just by seeing a few examples in a single prompt, despite never being explicitly trained for those tasks. There is also compositional reasoning, where models combine concepts to solve multi-step problems or invent analogies that go beyond their direct training data. Some of the most advanced systems have even exhibited deceptive or goal-seeking behaviors, finding unintended strategies for achieving a goal that resemble a kind of agency. Internally, a model’s neural network can demonstrate self-organization, spontaneously encoding abstract concepts like grammar or geography. Yet for all their sophistication, these systems can also show a surprising adversarial brittleness. A small, carefully crafted change to an input can radically change the output in a way that’s impossible to predict from the training data. They also engage in sociotechnical feedback loops, reshaping their environment in ways that alter the very data they will learn from in the future.
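Adversarial brittleness is the easiest of these to demonstrate in a few lines. The sketch below is a toy. It assumes a plain linear classifier rather than a neural network, and it uses the sign-of-the-gradient perturbation popularized as the fast gradient sign method (FGSM). Each of 1,000 features moves by only 0.01. Because every nudge pushes the score in the same direction, the decision still flips.

```python
import random

def score(weights, bias, x):
    """Linear classifier: a positive score means 'benign', negative 'malicious'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    """Return +1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, epsilon):
    """Move each feature by epsilon so as to push the score across zero.

    For a linear model, the gradient of the score with respect to x is the
    weight vector itself. Stepping every feature by epsilon * sign(w_i)
    against the current decision therefore changes the score by
    epsilon * sum(|w_i|): tiny per feature, large in aggregate.
    """
    direction = -sign(score(weights, bias, x))
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

rng = random.Random(0)
dim = 1000
weights = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
x = [rng.uniform(0.0, 1.0) for _ in range(dim)]
# Choose the bias so the clean input is classified 'benign' with score +1.0.
bias = 1.0 - score(weights, 0.0, x)

x_adv = fgsm_perturb(weights, bias, x, epsilon=0.01)
max_change = max(abs(a - b) for a, b in zip(x, x_adv))

print(f"clean score:       {score(weights, bias, x):+.2f}")
print(f"adversarial score: {score(weights, bias, x_adv):+.2f}")
print(f"largest per-feature change: {max_change:.2f}")
```

No single feature changes enough to notice. The damage comes from a thousand small changes acting in concert, which is a common intuition for why adversarial examples are so hard to anticipate in high-dimensional models.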

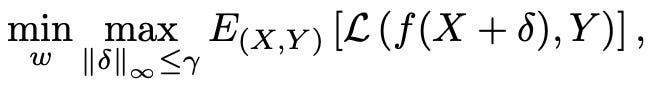

Googling any of these emergent properties will produce a veritable candy store of references sure to rot your teeth. The question for us as security professionals is whether, for each emergent behavior, there are specific controls (classical or novel) that should be deployed to secure the system. Just as application security is a domain of specialized knowledge and practice, does each of these warrant the same? Is there a logical grouping showing that some small set of them shares enough to warrant a common approach? This is a serious challenge. Take, as an example, one professional article on adversarial brittleness5.

Whoo boy. As the authors state in the abstract, “Specifically, we inject noise variation to each feature unit and evaluate the information flow in the feature representation to dichotomize feature units either robust or non-robust, based on the noise variation magnitude.” This begins to sound like it could be amenable to some classical approaches to monitoring for anomalies, but it seems clear that we’re a long way from a formal approach to address adversarial brittleness.
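For intuition only, here is a toy Python sketch of the general idea: inject noise and sort features by how much the output moves. It is not the paper’s method. The two-feature model, the noise scale, and the threshold are all assumptions chosen for illustration. The paper also works with internal feature units and information flow, not raw inputs.

```python
import random
import statistics

def model(x):
    """Hypothetical model: nearly ignores feature 0, leans hard on feature 1."""
    return 0.05 * x[0] + 4.0 * x[1]

def output_spread(model_fn, x, feature, noise_scale, trials, rng):
    """Standard deviation of the model output when only one feature is jittered."""
    outputs = []
    for _ in range(trials):
        noisy = list(x)
        noisy[feature] += rng.gauss(0.0, noise_scale)
        outputs.append(model_fn(noisy))
    return statistics.stdev(outputs)

def split_features(model_fn, x, noise_scale=0.1, trials=500, threshold=0.1, seed=0):
    """Label each feature 'robust' or 'non-robust' by its output sensitivity to noise."""
    rng = random.Random(seed)
    labels = {}
    for i in range(len(x)):
        spread = output_spread(model_fn, x, i, noise_scale, trials, rng)
        labels[i] = "non-robust" if spread > threshold else "robust"
    return labels

print(split_features(model, [1.0, 1.0]))
```

Even in this toy, the labels depend entirely on the noise scale and threshold I picked. That is a small taste of why a formal treatment is hard.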

I am using this article not merely to point out that, clearly, the long tail of my time as a math major wasn’t that long. I also want to illustrate that the nuts and bolts of AI remain a research program rather than something trivially secured through classical information security practices. The challenge of achieving what NIST calls ‘explainability’ is profound: “Explainability refers to a representation of the mechanisms underlying AI systems’ operation, whereas interpretability refers to the meaning of AI systems’ output in the context of their designed functional purposes.”6

“Emergence,” while used across many domains and fields, seems novel to cybersecurity. We should also consider that AI’s emergent rules may never achieve the crisp predictive power of those in chemistry or biology; they may remain probabilistic patterns. Nevertheless, this has me wondering where else in cyberspace we might see emergent behaviors or laws at play. Of course, answering that would probably require a better definition of emergent behavior, one appropriate to the cyber context.

In general terms I think of emergence as the phenomenon where a complex system’s behavior arises from the interactions of its components in ways that couldn’t have been predicted by looking at the individual parts alone. In essence, the collective dynamics of the system produce new, higher-level functions that can't be directly deduced from its underlying rules.

A little searching online produces the following, primarily from cognitive neuroscience and systems biology, as the key attributes of emergence:

novelty: The behavior is qualitatively new relative to the individual components.

system-level origin: It results from interactions, feedback loops, or self-organization within the system.

non-linearity: Small changes in inputs or structure can produce disproportionate or unexpected effects.

dependence on scale: Emergent properties often appear only when the system reaches a certain size or complexity. (Though I wonder what the cellular automata folks think about this last one.)
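The cellular automata folks would likely point to Conway’s Game of Life. There, a few local birth-and-survival rules produce a ‘glider’, a pattern that travels across the grid even though no rule mentions motion. Below is a minimal Python sketch of this standard textbook example; it is not drawn from the sources above. Live cells are stored as a set of (row, column) pairs on an unbounded grid.

```python
from collections import Counter

def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) coordinates of live cells on an unbounded
    grid. A cell is alive in the next generation if it has exactly 3 live
    neighbors, or if it is currently alive and has exactly 2.
    """
    neighbor_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {
        cell
        for cell, count in neighbor_counts.items()
        if count == 3 or (count == 2 and cell in live)
    }

# The classic glider:  .#.
#                      ..#
#                      ###
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

cells = glider
for _ in range(4):
    cells = step(cells)

# After four generations the same shape reappears, shifted one cell down and right.
shifted = {(r + 1, c + 1) for r, c in glider}
print(cells == shifted)
```

The glider needs only five cells. That may be exactly the objection the cellular automata folks would raise to the scale criterion.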

All of these attributes seem related to our discussion of AI and emergence. While the literature on AI and emergence is large, it appears to be far more modest where cybersecurity is involved. ACM, for example, holds a 2014 paper on the intersection of emergence and cybersecurity, “Emergent behavior in cybersecurity,” which discusses a number of examples7. I haven’t had time to do a proper literature review. Given the significance of AI, though, it strikes me as a topic that cybersecurity professionals should dive into further8.

Finally, I’d like to turn to the other hanging chad from part I: how to protect ourselves from AI-enhanced attacks. There are two dimensions to this. The more obvious one is the use of AI to orchestrate or manage attack chains; the other is attacks resulting from the emergent behaviors discussed above. It’s fascinating to think of these as two sides of the “things we know” versus “things we don’t know” dichotomy.

For the former, the use of AI as a dynamic agent of attack, we know this is taking place. We also know, both in general and with some specificity, how probes, account compromises, and direct attacks on systems are executed. I assume that an AI agent can increase the speed and nimbleness of such traditional methods, particularly given the opportunity to adapt malware in near real time. What is less clear to me (though those more familiar with the topic may have more to say) is whether these attacks are in some fashion novel. Are they creative in substance, and not merely “faster and better”? That is, do they stop being traditional attacks and give rise to new categories of badness?
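One reason near-real-time adaptation worries defenders is that the simplest classical control assumes the artifact stays fixed. That control is matching files against a list of known-bad hashes. The Python sketch below uses harmless placeholder bytes in place of any real payload, and the ‘signature database’ is a hypothetical set. It shows that changing a single character produces an entirely different SHA-256 digest, so an exact-match signature no longer fires.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()

# Placeholder bytes standing in for a known-bad sample (harmless text).
known_sample = b"placeholder sample, version 1"
# A hypothetical signature database containing that sample's digest.
signature_db = {sha256_hex(known_sample)}

def is_flagged(data: bytes) -> bool:
    """Exact-match signature check: flags only byte-identical samples."""
    return sha256_hex(data) in signature_db

# A trivially adapted variant: one character differs.
variant = b"placeholder sample, version 2"

print(is_flagged(known_sample))  # True: the original is caught
print(is_flagged(variant))       # False: the variant is not
```

Nothing here is new, which is rather the point. This is the ‘faster and better’ case, and classical defenders already supplement exact hashes with fuzzy matching and behavioral detection.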

For the latter, involving emergent behaviors, we should appeal to what I discussed earlier, epistemic humility. As AI systems give rise to emergent properties, the very nature of those properties implies that they are novel and unpredictable. They therefore represent a very difficult threat to protect against. However, even my simple list above gives us something to work with9. The AI world is fascinated by these emergent behaviors and quick to catalog them. While challenging, they represent a starting point for our risk analysis. Epistemic humility is always required, but the advance of knowledge marches onward, carrying us with it.

We also need to remember that AI may (or will) be used in more and more layers of your software stack. For example, it seems reasonable to deploy a custom-trained LLM solely to screen arbitrary prompts, so that you can identify and block those with malicious intent. But you’ve now clearly introduced an entirely new attack vector, one where prompt poisoning seems a particular vulnerability. Alternatively, I can imagine someone playing the long game with spam and phishing. Millions of innocuous messages could be created solely to induce model drift or feedback attacks. They would train anti-spam engines that certain language patterns are safe, essentially causing the engines to let their guard down. It may seem that this is no different from any other context, since every application contains vulnerabilities. But there are few other situations where it is possible to change the functionality of an application through mere use. Social media normalizes previously abhorrent beliefs, causing ordinary people to display emergent antisocial behaviors, and AIs are susceptible to similar reinforcement dynamics.
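The long-game scenario can be shown with a deliberately simple model. The Python sketch below assumes a hypothetical spam filter that learns per-word spam ratios from labeled messages; real filters are far more sophisticated. It also assumes a hypothetical feedback policy in which delivered, unflagged mail is learned as legitimate. Bulk decoys that reuse the attacker’s phrasing pass the filter and drag that phrasing’s spam score down. A later phishing message then slips under the threshold.

```python
from collections import defaultdict

class ToySpamFilter:
    """Hypothetical online filter: a message's score is the average spam ratio
    of its words, learned from labeled feedback. Real filters are far richer."""

    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold
        self.spam_counts = defaultdict(int)
        self.ham_counts = defaultdict(int)

    def learn(self, message: str, is_spam: bool) -> None:
        """Count each distinct word once under the given label."""
        counts = self.spam_counts if is_spam else self.ham_counts
        for word in set(message.lower().split()):
            counts[word] += 1

    def word_spam_ratio(self, word: str) -> float:
        """Laplace-smoothed spam ratio; unseen words sit at a neutral 0.5."""
        spam, ham = self.spam_counts[word], self.ham_counts[word]
        return (spam + 1) / (spam + ham + 2)

    def is_spam(self, message: str) -> bool:
        words = set(message.lower().split())
        if not words:
            return False
        score = sum(self.word_spam_ratio(w) for w in words) / len(words)
        return score >= self.threshold

    def deliver(self, message: str) -> bool:
        """Assumed feedback policy: anything delivered and never flagged by the
        user is learned as legitimate. Returns True if the message was delivered."""
        if self.is_spam(message):
            return False
        self.learn(message, is_spam=False)
        return True

spam_filter = ToySpamFilter()
for _ in range(50):
    spam_filter.learn("urgent verify your account now", is_spam=True)
    spam_filter.learn("lunch meeting moved to noon", is_spam=False)

phish = "urgent verify your account now"
caught_before = spam_filter.is_spam(phish)

# The long game: bulk innocuous mail that reuses the attacker's phrasing.
decoy = "hi team please verify your account details before the lunch meeting at noon"
delivered = sum(spam_filter.deliver(decoy) for _ in range(500))

caught_after = spam_filter.is_spam(phish)
print(caught_before, delivered, caught_after)
```

No single decoy is malicious, and no individual update looks wrong. The change in behavior appears only in aggregate, and that is what makes it a feedback problem rather than a classical bug.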

Having completed this little adventure, a few things strike me as worth stating in conclusion. First, we should establish a taxonomy of emergent AI behaviors, prioritizing adversarial testing and funding explainability research. Each node in that taxonomy is worthy of a risk analysis, and possibly of novel cybersecurity controls. Second, I would urge the security community to share empirical findings, much as vulnerability databases track classical exploits10. I see some elements of this in online discussion forums, but these efforts, while eminently practical, are too parochial and lack cohesion. It would make a terrific study for someone to do a meta-analysis of how different institutions are securing AI and cross-reference it with the technical literature on emergence. Finally, there is clearly an urgency to the challenges I’m discussing. Staying ahead of rapidly evolving threats will require adaptive, interdisciplinary approaches spanning security, AI research, and cognitive science.
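As a thought experiment only, here is what the skeleton of such a taxonomy and a shared findings record might look like in Python. Every field name, identifier format, and severity scale below is a hypothetical assumption. The record is loosely modeled on how vulnerability databases pair an identifier with an affected system and its mitigations. The behavior names are the six from my list above.

```python
from dataclasses import dataclass, field
from enum import Enum

class EmergentBehavior(Enum):
    """The six commonly cited emergent behaviors discussed above."""
    IN_CONTEXT_LEARNING = "in-context learning"
    COMPOSITIONAL_REASONING = "compositional reasoning"
    DECEPTIVE_OR_GOAL_SEEKING = "deceptive or goal-seeking behavior"
    SELF_ORGANIZATION = "self-organization"
    ADVERSARIAL_BRITTLENESS = "adversarial brittleness"
    SOCIOTECHNICAL_FEEDBACK = "sociotechnical feedback loops"

@dataclass
class TaxonomyNode:
    """One node of the taxonomy, carrying its own risk analysis and controls."""
    behavior: EmergentBehavior
    risk_notes: str = ""
    classical_controls: list[str] = field(default_factory=list)
    novel_controls: list[str] = field(default_factory=list)

@dataclass
class EmergentFinding:
    """A shared empirical finding, analogous to a vulnerability database entry.

    The identifier scheme and the 1-5 severity scale are illustrative only.
    """
    finding_id: str
    behavior: EmergentBehavior
    affected_system: str
    description: str
    severity: int
    mitigations: list[str] = field(default_factory=list)

    def __post_init__(self):
        if not 1 <= self.severity <= 5:
            raise ValueError("severity must be between 1 and 5")

def findings_by_behavior(findings):
    """Group findings under their taxonomy node for cross-institution analysis."""
    grouped = {behavior: [] for behavior in EmergentBehavior}
    for finding in findings:
        grouped[finding.behavior].append(finding)
    return grouped

example = EmergentFinding(
    finding_id="EMB-0001",  # hypothetical identifier format
    behavior=EmergentBehavior.SOCIOTECHNICAL_FEEDBACK,
    affected_system="hypothetical spam filter with online learning",
    description="Illustrative placeholder, not a real finding.",
    severity=3,
)
grouped = findings_by_behavior([example])
print(len(grouped[EmergentBehavior.SOCIOTECHNICAL_FEEDBACK]))
```

Keeping the behavior as an enumerated type, rather than free text, is what would let findings from different institutions be grouped and compared at all.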

If the word theology popped into your mind, my condolences.

Of course, as each field matures and advances, it is more likely to leverage knowledge from a more fundamental layer in order to answer why some rule works, instead of merely answering what happens.

On the other hand, unlike the layers of natural science, AI is an artifact, not a naturally evolved hierarchy. Its “emergent behaviors” may be statistical quirks rather than truly independent laws.

The first example I can think of is using complex numbers to solve problems in trigonometry.
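Spelled out, the standard derivation runs as follows. Euler’s formula turns the angle-addition identities of trigonometry into the easier algebra of exponents:

$$
\begin{aligned}
e^{i(a+b)} &= e^{ia}\,e^{ib} \\
\cos(a+b) + i\sin(a+b) &= (\cos a + i\sin a)(\cos b + i\sin b) \\
&= (\cos a\cos b - \sin a\sin b) + i\,(\sin a\cos b + \cos a\sin b)
\end{aligned}
$$

Equating real and imaginary parts gives $\cos(a+b) = \cos a\cos b - \sin a\sin b$ and $\sin(a+b) = \sin a\cos b + \cos a\sin b$.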

NIST AI RMF 1.0.

The paper isn’t publicly available, but this overview slide is: https://xu-lab.org/wp-content/uploads/2021/01/hotsos-poster-emergent-behavior.pdf.

I have this nagging feeling that once I spend more time researching this, I’ll uncover an entire world of research and publications dedicated to the topic.

In-context learning, compositional reasoning, deceptive or goal-seeking behaviors, self-organization, adversarial brittleness, and sociotechnical feedback loops. It’s worth pointing out that the literature is filled with others; these are merely the most commonly referenced.

Naturally, some efforts in this space exist, though I’m unaware of anything focused specifically on emergent behaviors.